AI will make you 10x faster. That is the promise I have heard. I’ve also heard that AI will turn non-technical people into technical people or, more accurately, lower the bar for app development.

But the most important thing I know about AI (and this thought is by no means original to me!) is that you can’t know what AI can do until you work with it.

Consequently, I embarked on a new project expecting to be 10x faster. The project lives in a public repository of mine, and I’ll share how many hours it took later in this post.

The Project

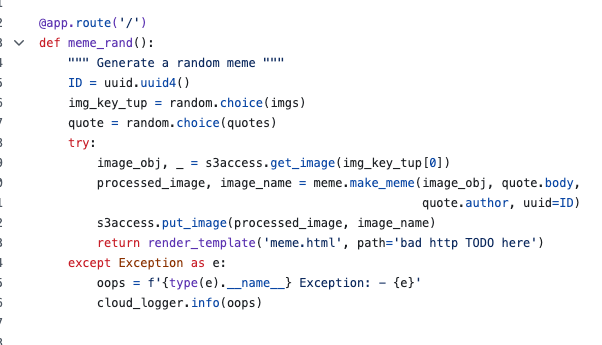

The project is a machine-learning web application. A user clicks through a series of randomly chosen fantasy character portraits (thank you, Nexus Mods!) and labels their binary gender (this is about the KISS principle, not about erasure of non-binary genders!). Then, with scikit-learn, we’ll train a model on the collection of images and gender labels, and see how it goes.

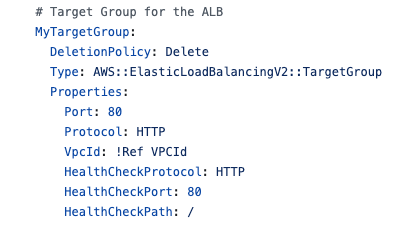

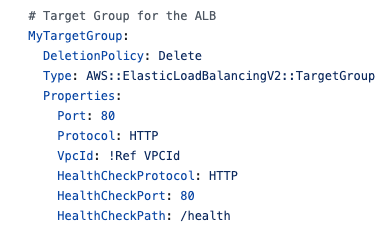

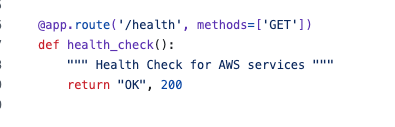

For deployment, this will use AWS and deploy through GitHub. Resources used will be Fargate, S3, Lambdas, an autoscaler, a load balancer, an RDS database, etc. Terraform will be used for infrastructure as code.

I picked this project because it combines tech I know extremely well (Python, AWS, SQL), tech that is new to me but that I’m comfortable with (Terraform), and tech that I am far from an expert in (Docker, machine learning, nginx).

Which AI Services Were Used?

I thought I’d check out v0 by Vercel, Cursor, and Google’s Gemini for this project. None of these are paid versions. I also did not rely on them for the same tasks, or equally. Anything I say about their merits is therefore limited.

How were these services used? I started with Google Gemini and described the machine learning problem I wanted to solve. My question was intentionally broad: “How can I go through hundreds of images, and label them according to a category (e.g. ‘dog’ or ‘cat’)? I want to use python and scikit-learn.” Gemini guided me to a model I’ve seen before (logistic regression). It further explained that I would need to pre-process the images into NumPy arrays. I asked follow-up questions, telling it that not all of the images had the same dimensions. It responded with suggestions on padding them with scikit-image (skimage).
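The pipeline Gemini described (pad images to a common size, flatten them into NumPy arrays, fit a logistic regression) can be sketched roughly as follows. This is a minimal illustration, not the code from the repository: it pads with plain `np.pad` rather than scikit-image, uses synthetic grayscale arrays in place of real portraits, and the helper names `pad_to_shape` and `images_to_matrix` are made up for the example.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def pad_to_shape(image, height, width):
    """Zero-pad a 2-D grayscale image on its bottom and right edges."""
    pad_rows = height - image.shape[0]
    pad_cols = width - image.shape[1]
    if pad_rows < 0 or pad_cols < 0:
        raise ValueError("image is larger than the target shape")
    return np.pad(image, ((0, pad_rows), (0, pad_cols)), mode="constant")


def images_to_matrix(images):
    """Pad every image to the largest height and width, then flatten each into one row."""
    height = max(img.shape[0] for img in images)
    width = max(img.shape[1] for img in images)
    return np.stack([pad_to_shape(img, height, width).ravel() for img in images])


# Synthetic stand-ins for portraits (an assumption for the demo):
# bright images belong to class 1, dark images to class 0.
rng = np.random.default_rng(0)
images, labels = [], []
for i in range(40):
    label = i % 2
    shape = (int(rng.integers(8, 12)), int(rng.integers(8, 12)))
    base = 0.8 if label else 0.2
    images.append(base + 0.05 * rng.standard_normal(shape))
    labels.append(label)

X = images_to_matrix(images)  # one flattened, padded image per row
y = np.array(labels)

model = LogisticRegression(max_iter=1000).fit(X, y)
print(X.shape, model.score(X, y))
```

Real portraits would also need loading, grayscale or channel handling, and a held-out test set; the sketch only shows why mismatched dimensions have to be resolved before scikit-learn can accept the data as a single matrix.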

I used v0 to make an outline of the project. My prompt was “give me a flask project that shows and image. Beneath the image is a button for ‘male’ or ‘female’. Put that project in a folder that is called ‘web-app’…” and so forth. I then asked it to provide CSS “like a website from 1998”. Then I asked it for a few Terraform files “to deploy this like it will got ECS Fargate, and make the cheapest possible EFS for storage” and “can you write a JSON object for the IAM permissions that are required to deploy this” etc. I also asked it to remove the JavaScript from the Flask app, simply to see if it could refactor.

Finally, I downloaded the v0 project as a zip file, unzipped it, made several manual changes and created a git repo. From then on, I relied on Cursor. I asked Cursor what it thought the project was attempting to do, and it gave a correct answer and wrote some documentation. I asked it to create .tf files for a Lambda and a corresponding Lambda file in a specific directory. It did so. The same went for other Docker files, updates to IAM permissions, and more .tf files.

Cursor wrote the GitHub workflows. These are flows I can read and understand, but have never written myself. I gave it prompts like “I’d like to write a check for the PEP8 standards. It should run on any pull request into main, and refuse the pull request if any Python file fails the PEP8 test.”

The project proceeded from there in Cursor.

Great Results from AI

V0 impressed me. Through its easy-to-understand interface, it not only wrote correct code but also arranged the project’s folder structure the way I had asked. I tried this a few other times as well, without prompting it for a folder structure, and it produced results that were sensible and understandable.

The Flask application’s CSS did change as I asked: it produced a 1998-style website, in all of its nostalgic hideousness. It evoked the fascination of the early web, like watching a large image load in a mere 30 seconds! I might have asked it to simulate a 56k dial-up hiss next.

Gemini assisted with brainstorming like a subservient, technically astute butler. If I asked a question that I knew would be ‘the wrong’ question (“What is the best machine learning model for image categorization?”), it answered rightly that there is no ‘best option’ and summarized the advantages of some models over others. I told it that I was concerned about too much memory usage in a single ECS task. It offered me the options of training in batches or training in parallel, and provided streams of coding examples for both.

Were the answers from Gemini the best answers, or did it hallucinate? That I can’t answer. Scikit-learn is something I’ve used for less than a year. Still, its answers were fluid, helpful, and offered ideas to investigate.

Cursor, where I did the bulk of the editing, proved useful in both brainstorming and coding. I checked what it would say if I asked “should I store numpy arrays in S3 or EFS? I don’t expect them to be accessed continuously, but I will need to train a model with them. That model will run in ECS.” It gave a reply I thought made sense: EFS is much more expensive than S3, but it has the advantage of keeping containers only loosely coupled to AWS services, and it can be shared as a common network drive across several ECS tasks. It wound up recommending S3 due to cost, especially since I had also told it I did not plan on training a model across parallel containers.
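For a sense of what the S3 option involves, a NumPy array can be serialized to bytes in memory and handed to an S3 client, with no temporary file on disk. The following is a sketch under assumptions, not the project's code: the function names are hypothetical, and `s3_client` is expected to be something like `boto3.client("s3")`, whose `put_object` and `get_object` calls are used here.

```python
import io

import numpy as np


def array_to_bytes(array):
    """Serialize an array to the .npy format in memory."""
    buffer = io.BytesIO()
    np.save(buffer, array, allow_pickle=False)
    return buffer.getvalue()


def bytes_to_array(payload):
    """Rebuild an array from .npy bytes."""
    return np.load(io.BytesIO(payload), allow_pickle=False)


def upload_array(s3_client, bucket, key, array):
    """Store one array as a single S3 object."""
    s3_client.put_object(Bucket=bucket, Key=key, Body=array_to_bytes(array))


def download_array(s3_client, bucket, key):
    """Fetch an S3 object and decode it back into an array."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return bytes_to_array(response["Body"].read())


# Local round trip that needs no AWS credentials.
original = np.arange(12, dtype=np.float32).reshape(3, 4)
restored = bytes_to_array(array_to_bytes(original))
print(np.array_equal(original, restored))
```

With real credentials, usage would look like `upload_array(boto3.client("s3"), "my-bucket", "arrays/0001.npy", original)`, where the bucket and key are placeholders. Passing the client in as an argument also makes the functions easy to exercise with a fake client in tests.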

The writing of novel code also impressed me. When I asked it for a Lambda or a GitHub workflow, it wrote with certain patterns I did not recognize. I asked it questions like “why did you institute that variable as ‘None’ on line 12?” or “why are the Terraform Plan and Terraform Apply commands separate?” It politely tutored me on best practices, explaining why these design patterns were common. In the end, I was able to update my workflows without reading any documentation. I asked Cursor to disable or re-enable runs on pull requests, pushes, etc., and it modified them correctly.

As my project grew, it did reasonably well at managing IAM permissions, particularly when it came to consolidating and organizing policy documents. More on that later though.

What Cursor Didn’t Do So Well

I love code that is clean and easy to read. PEP8 helps me keep it that way. Cursor had a different opinion on all that. I wrote broad prompts like “re-format this file for pep8 standards” or even “run pycodestyle, and correct any formatting errors.” Neither worked. The latter test surprised me the most. The pycodestyle command is lucidly blunt about what you need to fix. Cursor could never get it done efficiently, and I continue to check PEP8 manually.

I gave Cursor a prompt such as ‘make a reverse proxy in this folder. Make sure it has Docker file. Also, use gunicorn to handle the headers.’ I expected the simplicity I had seen in a Udemy course. What I got was not that, and it was flawed: Cursor placed header information in both the Dockerfile and the related gunicorn file, which caused errors at deployment.

Another Docker-related example was this: I got errors in which a Dockerfile could not find a related file to import when I deployed it, even though it built fine from a terminal command. Cursor suggested a fix that involved going several directories up and performing a recursive search for the apparently absent file. It turned out the error was in my Deploy.yml. I had the docker build command ending in ‘.’ instead of the intended ‘..’, which made this a remarkably simple error to identify and fix.

These are only two examples in which Cursor built a Rube Goldberg machine to solve an issue, when best practices call for Occam’s Razor. With these occasional Rube Goldberg solutions, Cursor proved as error-prone as any human. A change in one file to fix one problem caused a break in functionality in another file, causing another problem. I learned to ask Cursor to double-check, sometimes with colorful language.

Cursor proved an incredible time-saver when it came to IAM permissions. It was great at analyzing them and refactoring them as needed. But I did notice a flaw: it didn’t seem to follow least privilege consistently. Frequently, its first suggestion for permissions invoked the dreaded asterisks on resources. Furthermore, it was almost never accurate in predicting all the permissions that would be needed to deploy a resource. Even prompts like “Make sure the resource has read permissions, to the s3 bucket. Apply write permissions to only keys that begin with ‘write-directory'” would miss critical permissions.
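For comparison, a least-privilege policy matching that last prompt might look something like the sketch below, built here as a Python dictionary and printed as JSON. The bucket name and the `build_s3_policy` helper are hypothetical, and a real deployment may well need more than this (for example, KMS permissions on an encrypted bucket), which is exactly the kind of gap described above.

```python
import json


def build_s3_policy(bucket, write_prefix):
    """Build a policy: read anywhere in the bucket, write only under one key prefix."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading is an object-level action, so it targets object ARNs.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
            {
                # Writes are limited to keys that begin with the prefix.
                "Sid": "WriteUnderPrefix",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"{bucket_arn}/{write_prefix}*"],
            },
        ],
    }


# "example-portraits-bucket" is a placeholder name, not the project's bucket.
policy = build_s3_policy("example-portraits-bucket", "write-directory")
print(json.dumps(policy, indent=2))
```

The point of the structure is that no statement uses a bare `*` as its resource, and the write statement is scoped to a prefix rather than to the whole bucket.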

Closing thoughts

Did AI make me 10x faster at making this project? At the time of this writing, I’ve put about 16 hours of work into it, and I estimate it is about 60% complete. It did make things faster, but not to such an astronomical degree. If you’d like me to set up a GeoCities site with some raw JavaScript, though, that can be finished before my Napster finishes a download.

Will AI turn a non-technical person into a technical person? A qualified yes, if that non-technical person wants to use AI as a teaching aid rather than a skill replacement. If you are a non-technical person ready to deploy a cool app to the AWS cloud, consider this: when I first learned cloud development, I somehow made a git commit that publicly exposed (non-root) AWS access credentials. Next, I got a surprise $635 bill. Amazon had disabled the compromised IAM user. They forgave the bill, but I still went region by region, resource by resource, to ensure nothing was left running. This is one of hundreds of ways things can go wrong if you don’t know what to ask for.

This leads to another point. AI can give you ideas, recommendations, and explanations of trade-offs, but it cannot make decisions for you. I chose to deploy this project with Fargate because it was simple (see the KISS principle above), but that decision was pretty unconstrained. I’m not considering pre-existing infrastructure, as one would in a professional environment. I am barely considering the cost, either. I would under no circumstances trust an AI to tell me “how much will running this project cost?” or “will Fargate be costlier than EKS?” because it will likely hallucinate the math. It would flatter me with “great question!” while doing so.

There is one final thought, though. Even though AI did not make this project exponentially faster to deploy, it did make it easier overall. As I debugged and re-deployed, it was nice to have an integrated terminal to ask questions in, rather than having four browser tabs open between official documentation, Stack Overflow, a repo of sample code, etc. If I updated or introduced a variable name, I did not have to scour my code for every single reference either. I also grew in skill as I asked it questions about why it structured code a certain way. It answered questions when I didn’t understand a particular error, whether in a deployment, in a CloudWatch log, or locally.

The explanations have always been fruitful, even if I make mental notes to double check for accuracy.

Thanks for reading, and remember, this is only one developer’s experience!